ChatGPT takes physical form: AI now capable of direct human interaction

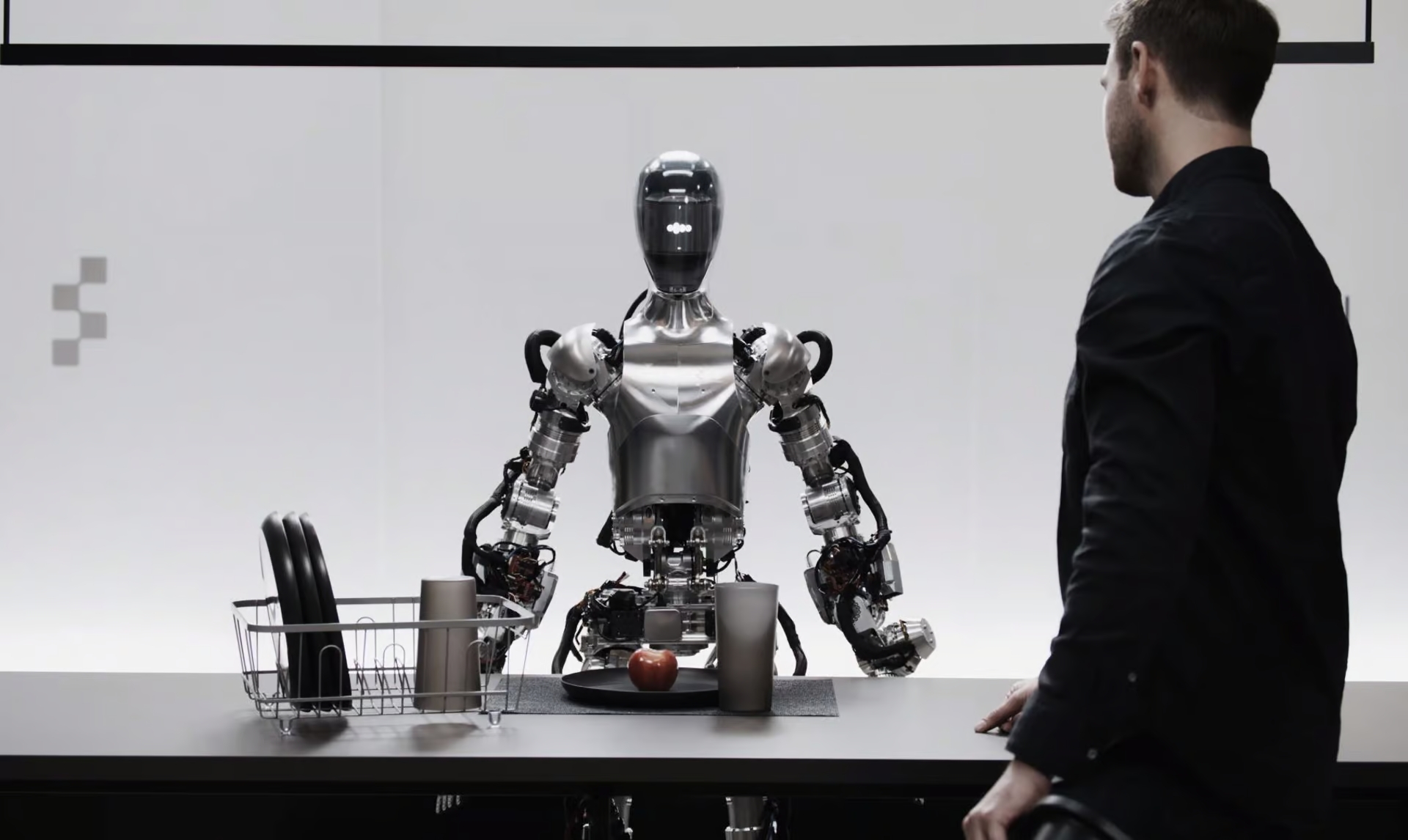

OpenAI unveiled a humanoid robot with built-in artificial intelligence (Photo: Pexels)

Figure, a US startup, has demonstrated the first results of its collaboration with OpenAI to expand the capabilities of humanoid robots. In the video, the company's robot holds a real-time conversation with a person, answering questions and following commands, reports New Atlas, one of the world's largest independent science and technology media outlets.

What we know about the new robot

The rapid progress of the Figure 01 project and the Figure company as a whole is truly exciting.

A year ago, Brett Adcock, the businessman who founded the startup, came into the spotlight after the company caught the attention of major players in the robotics and artificial intelligence market, such as Boston Dynamics, Tesla, Google DeepMind, Archer Aviation, and others. The company's stated goal was to "create the world's first commercially viable general purpose humanoid robot."

By October of the same year, Figure 01 demonstrated its abilities in performing basic autonomous tasks and by the end of 2023 had gained the ability to learn various tasks.

By mid-January, Figure had secured its first commercial contract, for the use of Figure 01 at BMW's automotive plant in South Carolina, USA.

Last month, a video was released showing Figure 01 working in a warehouse. Shortly after, the company announced that it was developing a second-generation machine and collaborating with OpenAI to create a new generation of AI models for humanoid robots.

On March 13th, Figure presented a video showcasing the first results of this collaboration.

The humanoid robot Figure 01 (photo: New Atlas)

On his X (formerly Twitter) page, Adcock explained that the cameras built into Figure 01 feed data to a large vision-language model (VLM) trained by OpenAI, while Figure's own neural networks also take in images of the surrounding environment at 10 Hz through the robot's cameras.

OpenAI's models are responsible for the robot's ability to understand human language, while Figure's neural network translates that stream of information into fast, low-level, dexterous robot actions.
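For readers who think in code, the division of labor described here can be pictured as a two-stage loop: a high-level model turns speech and camera images into a chosen behavior, and a low-level policy turns each camera frame into a motor action. The sketch below is purely illustrative and is not Figure's or OpenAI's software. Every name in it (`Frame`, `capture_frames`, `choose_behavior`, `low_level_action`, `run_pipeline`, and the `pick_up_cup` behavior) is hypothetical, the "models" are trivial stand-ins, and only the 10 Hz image rate comes from Adcock's description.

```python
from dataclasses import dataclass
from typing import List

FRAME_RATE_HZ = 10  # camera image rate quoted in the article


@dataclass
class Frame:
    timestamp_s: float
    description: str  # hypothetical placeholder for real pixel data


def capture_frames(duration_s: float, scene: str) -> List[Frame]:
    """Simulate camera frames arriving at 10 Hz for the given duration."""
    count = round(duration_s * FRAME_RATE_HZ)
    return [Frame(i / FRAME_RATE_HZ, scene) for i in range(count)]


def choose_behavior(frame: Frame, utterance: str) -> str:
    """Stand-in for the vision-language model: speech + image -> behavior.

    A real model would reason over pixels and language; this keyword
    match is only an assumption made to keep the sketch runnable.
    """
    if "pick up" in utterance.lower() and "cup" in frame.description:
        return "pick_up_cup"
    return "idle"


def low_level_action(frame: Frame, behavior: str) -> str:
    """Stand-in for the low-level network: frame + behavior -> action."""
    return f"t={frame.timestamp_s:.1f}s {behavior}"


def run_pipeline(utterance: str, scene: str, duration_s: float) -> List[str]:
    """Pick a behavior once, then emit one action per camera frame."""
    frames = capture_frames(duration_s, scene)
    if not frames:
        return []
    behavior = choose_behavior(frames[0], utterance)
    return [low_level_action(frame, behavior) for frame in frames]


if __name__ == "__main__":
    for action in run_pipeline("Please pick up the cup", "table with a cup", 0.5):
        print(action)
```

Half a second of simulated video yields five frames, and therefore five actions, which is what a 10 Hz image rate implies.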

The head of Figure stated that the robot was not remotely controlled during the demonstration and that the video was shown at real-time speed. "Our goal is to train a world model to operate humanoid robots at the billion-unit level," the startup's leader added. At the project's current pace, reaching that goal may not take long.